Kubernetes Lab, Day 2

- Deploying a service on the cluster

We start by deploying a lightweight service, nginx, with the following commands:

```
root@master:~# kubectl create deployment nginx --image=nginx
deployment.apps/nginx created
root@master:~# kubectl get deployments
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   0/1     1            0           7s
root@master:~# kubectl describe deployment nginx
Name:                   nginx
Namespace:              default
CreationTimestamp:      Thu, 11 Jan 2024 03:16:35 +0000
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx
Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        nginx
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   nginx-7854ff8877 (1/1 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  22m   deployment-controller  Scaled up replica set nginx-7854ff8877 to 1
```
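For comparison, the imperative `kubectl create deployment nginx --image=nginx` corresponds roughly to the declarative manifest below. It is a sketch reconstructed from the `describe` output (label and selector `app=nginx`, 1 replica, image `nginx`); the object kubectl actually generates also carries some defaulted fields not shown here.

```yaml
# Sketch: declarative equivalent of `kubectl create deployment nginx --image=nginx`
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx          # "Labels: app=nginx" in the describe output
spec:
  replicas: 1           # "Replicas: 1 desired"
  selector:
    matchLabels:
      app: nginx        # "Selector: app=nginx"
  template:
    metadata:
      labels:
        app: nginx      # must match the selector above
    spec:
      containers:
        - name: nginx
          image: nginx  # untagged, so it resolves to nginx:latest
```

Applying such a file with `kubectl apply -f` keeps the desired state in a file that can be versioned, which is the approach used later with `nginx.yaml`.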

Next, expose the nginx deployment as a NodePort service:

```
root@master:~# kubectl create service nodeport nginx --tcp=80:80
service/nginx created
root@master:~# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        15h
nginx        NodePort    10.107.213.36   <none>        80:32034/TCP   8s
```
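The `kubectl create service nodeport nginx --tcp=80:80` command above creates a Service roughly like the sketch below. `kubectl create service` derives the selector `app: nginx` from the service name, which is why it matches the pods of the `nginx` deployment. No `nodePort` is given, so Kubernetes picks one from the NodePort range (30000-32767 by default); that is where 32034 in the output comes from.

```yaml
# Sketch: roughly what `kubectl create service nodeport nginx --tcp=80:80` creates
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: NodePort
  selector:
    app: nginx          # selects the pods of the nginx deployment
  ports:
    - protocol: TCP
      port: 80          # Service (ClusterIP) port: left side of --tcp=80:80
      targetPort: 80    # container port: right side of --tcp=80:80
      # nodePort omitted: assigned automatically (32034 in this run)
```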

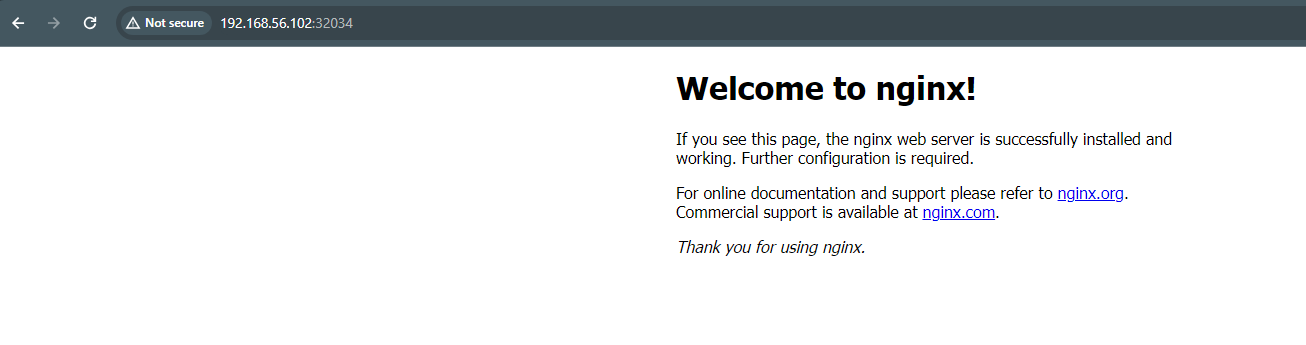

Once exposed, the service is reachable on port 32034 of each node's IP, since a NodePort service opens that port on every node. Here we test it from the console with curl:

```
root@master:~# curl master:32034
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
root@master:~# curl worker1:32034
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

The service can also be accessed from outside the cluster network using a browser.

Deploy service menggunakan custom manifest, buar file dengan nama nginx.yaml lalu paste script dibawah ini

apiVersion: apps/v1

kind: Deployment

metadata:

name: test-nginx

labels:

app: test-nginx

namespace: default

spec:

replicas: 2

selector:

matchLabels:

app: test-nginx

template:

metadata:

labels:

app: test-nginx

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- test-nginx

topologyKey: "kubernetes.io/hostname"

containers:

- name: nginx

image: nginx:stable-alpine

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

labels:

app: test-nginx

name: test-nginx

namespace: default

spec:

ports:

- nodePort: 30080

port: 80

protocol: TCP

targetPort: 80

selector:

app: test-nginx

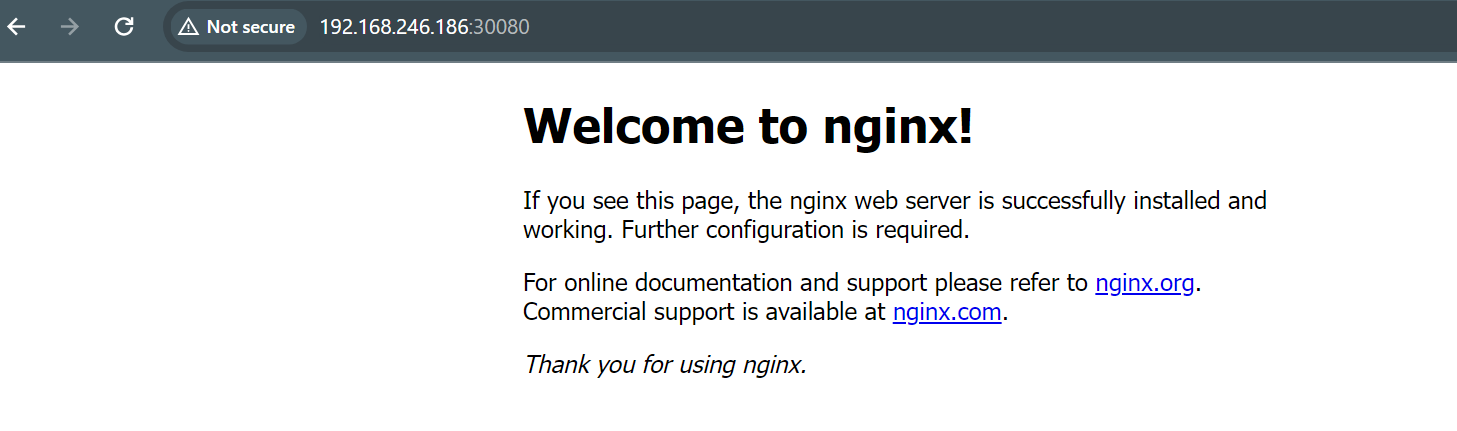

type: NodePortlalu deploy script tersebut menggunakan perintah

```
root@worker2:~# kubectl apply -f nginx.yaml
deployment.apps/test-nginx created
service/test-nginx created
```

Check the pods with:

```
root@worker2:~# kubectl get pods -owide
NAME                          READY   STATUS    RESTARTS   AGE   IP               NODE      NOMINATED NODE   READINESS GATES
test-nginx-5d44cfddf9-ds4s2   1/1     Running   0          5s    172.16.235.130   worker1   <none>           <none>
test-nginx-5d44cfddf9-tmt9m   1/1     Running   0          5s    172.16.189.66    worker2   <none>           <none>
```

To find the port the service listens on, use:

```
root@worker2:~# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP        54m
test-nginx   NodePort    10.111.4.93   <none>        80:30080/TCP   5m4s
```

Test access from a browser using a host server's IP and the NodePort, i.e. `<node-ip>:30080`.
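The `podAntiAffinity` rule in `nginx.yaml` is why the two replicas landed on different nodes (worker1 and worker2) in the `get pods` output: with `requiredDuringSchedulingIgnoredDuringExecution`, the scheduler will not place two `app=test-nginx` pods on the same `kubernetes.io/hostname`. One consequence is that scaling beyond the number of schedulable nodes leaves the extra replicas `Pending`. If spreading should be a preference rather than a hard rule, the affinity block could be swapped for the `preferred` form sketched below (the `weight` of 100 is an arbitrary choice within the allowed 1-100 range).

```yaml
# Sketch: soft variant of the anti-affinity rule in nginx.yaml
# (would replace the affinity block under spec.template.spec)
affinity:
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100                 # 1-100; higher means a stronger preference
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - test-nginx
          topologyKey: "kubernetes.io/hostname"   # spread across nodes when possible
```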

- Installing Helm on each node

Helm is a package manager for deploying services (packaged as charts) to a Kubernetes cluster.

First, add the Helm apt repository on each server:

```
curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | sudo tee /usr/share/keyrings/helm.gpg > /dev/null
sudo apt-get install apt-transport-https --yes
echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list
```

Then update the package index and install the helm package:

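```
sudo apt-get update
sudo apt-get install helm
```

Then add a chart repository to Helm on the Kubernetes cluster:

```
helm repo add stable https://charts.helm.sh/stable
```

Note that the `stable` repository at charts.helm.sh is archived and no longer receives chart updates.

- Installing and configuring the Ingress controller (nginx)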

Deploy the ingress controller with the following command:

```
root@master:~# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.3.0/deploy/static/provider/cloud/deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
```

Check the deployed ingress pods with:

```
root@master:~# kubectl get pods --namespace ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-7qblj       0/1     Completed   0          2m24s
ingress-nginx-admission-patch-2tvlt        0/1     Completed   0          2m24s
ingress-nginx-controller-fc8d7d749-tdj25   0/1     Running     0          2m24s
root@master:~# kubectl get service ingress-nginx-controller --namespace=ingress-nginx
NAME                       TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller   LoadBalancer   10.101.6.15   <pending>     80:31413/TCP,443:30907/TCP   2m42s
```

Next, configure an ingress for the service created earlier.
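A minimal Ingress for the `test-nginx` service could look like the sketch below. It assumes the `nginx` IngressClass created by the controller manifest (`ingressclass.networking.k8s.io/nginx created`); the Ingress name and the host `test-nginx.local` are hypothetical placeholders. After `kubectl apply -f`, the route could be tested through the controller's HTTP NodePort, for example `curl -H 'Host: test-nginx.local' http://<node-ip>:31413/`.

```yaml
# Sketch: route HTTP traffic for a hypothetical host to the test-nginx Service
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-nginx              # hypothetical name
  namespace: default            # same namespace as the test-nginx Service
spec:
  ingressClassName: nginx       # IngressClass created by the ingress-nginx manifest
  rules:
    - host: test-nginx.local    # hypothetical host name; replace with your own
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: test-nginx
                port:
                  number: 80    # the Service port, not the NodePort 30080
```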

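Test the service exposed through the load balancer. On a bare-metal cluster with no load-balancer implementation, the EXTERNAL-IP stays `<pending>`, so the controller is reached through its NodePorts instead (80:31413 and 443:30907 in the output above).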

- Installing and configuring metrics-server to monitor cluster resource usage

Install it with the following YAML manifest:

```
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
```

Check whether it is running:

```
root@worker2:/home/barkah# kubectl get deployment metrics-server -n kube-system
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
metrics-server   0/1     1            0           7h55m
root@worker2:/home/barkah# kubectl get pods -n kube-system
NAME                             READY   STATUS             RESTARTS          AGE
coredns-5dd5756b68-46vkp         0/1     Running            0                 22h
coredns-5dd5756b68-k4cwj         0/1     Running            0                 22h
etcd-master                      1/1     Running            0                 22h
kube-apiserver-master            1/1     Running            0                 22h
kube-controller-manager-master   1/1     Running            0                 22h
kube-proxy-hhtwq                 1/1     Running            0                 22h
kube-proxy-tgrwb                 1/1     Running            0                 22h
kube-proxy-x2lmd                 1/1     Running            0                 22h
kube-scheduler-master            1/1     Running            1                 22h
metrics-server-8857d6b7c-fs6kb   0/1     CrashLoopBackOff   152 (4m56s ago)   7h37m
metrics-server-fbb469ccc-vhz2m   0/1     Running            0                 7h51m
```

Check node and pod resource usage with the commands below. `kubectl top` only returns data once metrics-server is Ready, which is not yet the case in the output above (0/1, with one pod in CrashLoopBackOff).

```
kubectl top nodes
kubectl top pod
kubectl top pod -n kube-system
```

Troubleshoot
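A frequent cause of the `0/1` and `CrashLoopBackOff` metrics-server state shown earlier on kubeadm clusters is that metrics-server cannot verify the kubelets' self-signed serving certificates. A common lab-only workaround is to add the `--kubelet-insecure-tls` flag to the metrics-server container. The sketch below expresses that as a JSON patch written in YAML; it assumes metrics-server is the first (and only) container in the pod template, and the file name `metrics-server-patch.yaml` is hypothetical. It could be applied with `kubectl -n kube-system patch deployment metrics-server --type=json --patch-file=metrics-server-patch.yaml`. The flag turns off TLS verification for kubelet connections, so it is not suitable for production.

```yaml
# Sketch: JSON patch (YAML form) appending one argument to the metrics-server
# container. Lab use only: skips verification of kubelet certificates.
- op: add
  path: /spec/template/spec/containers/0/args/-   # "-" appends to the args list
  value: --kubelet-insecure-tls
```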

- Installing and configuring the Kubernetes Dashboard

Install it using the YAML manifest:

```
root@master:/home/barkah# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: non-functional in v1.27+; use the "seccompProfile" field instead
deployment.apps/dashboard-metrics-scraper created
```

Check the pod status:

```
root@master:/home/barkah# kubectl get pods -A
NAMESPACE              NAME                                         READY   STATUS              RESTARTS          AGE
calico-system          calico-kube-controllers-5c5f98768f-j6xqx     0/1     Running             261 (5m21s ago)   22h
calico-system          calico-node-2fkb9                            1/1     Running             0                 22h
calico-system          calico-node-f7rlk                            1/1     Running             0                 20h
calico-system          calico-node-m2n2c                            0/1     Running             0                 20h
calico-system          calico-typha-5fb8b56d64-d2glq                1/1     Running             0                 22h
calico-system          calico-typha-5fb8b56d64-q8vbv                1/1     Running             0                 22h
calico-system          csi-node-driver-4f5m8                        2/2     Running             0                 22h
calico-system          csi-node-driver-bns4w                        2/2     Running             0                 22h
calico-system          csi-node-driver-pvc2c                        2/2     Running             0                 22h
default                nginx-7854ff8877-pcqwv                       1/1     Running             0                 7h23m
kube-system            coredns-5dd5756b68-46vkp                     0/1     Running             0                 22h
kube-system            coredns-5dd5756b68-k4cwj                     0/1     Running             0                 22h
kube-system            etcd-master                                  1/1     Running             0                 22h
kube-system            kube-apiserver-master                        1/1     Running             0                 22h
kube-system            kube-controller-manager-master               1/1     Running             0                 22h
kube-system            kube-proxy-hhtwq                             1/1     Running             0                 22h
kube-system            kube-proxy-tgrwb                             1/1     Running             0                 22h
kube-system            kube-proxy-x2lmd                             1/1     Running             0                 22h
kube-system            kube-scheduler-master                        1/1     Running             1                 22h
kube-system            metrics-server-7c94c94795-mcngk              1/1     Running             0                 6m38s
kubernetes-dashboard   dashboard-metrics-scraper-856cb79ffb-q8cj5   1/1     Running             0                 33s
kubernetes-dashboard   kubernetes-dashboard-79cf5b89bb-2hhv9        0/1     ContainerCreating   0                 33s
tigera-operator        tigera-operator-55585899bf-qjn4w             1/1     Running             0                 22h
root@master:/home/barkah# kubectl -n kubernetes-dashboard get pods
NAME                                         READY   STATUS    RESTARTS      AGE
dashboard-metrics-scraper-856cb79ffb-q8cj5   1/1     Running   0             3m43s
kubernetes-dashboard-79cf5b89bb-2hhv9        1/1     Running   3 (45s ago)   3m43s
```

Troubleshoot
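The dashboard login page asks for a bearer token, a step not covered above. A common way to get one in a lab, following the sample-user pattern from the Kubernetes Dashboard documentation, is a ServiceAccount bound to `cluster-admin`, as sketched below. The name `admin-user` is an assumption, and `cluster-admin` grants full control of the cluster, so this is only for a test environment. After applying it, a short-lived token can be requested with `kubectl -n kubernetes-dashboard create token admin-user` (available since Kubernetes 1.24).

```yaml
# Sketch: lab-only admin account for logging in to the dashboard.
# "admin-user" is an assumed name; cluster-admin grants full cluster access.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin          # built-in superuser role
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
```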

References

https://spacelift.io/blog/kubernetes-ingress

https://www.linuxtechi.com/how-to-install-kubernetes-metrics-server/

https://upcloud.com/resources/tutorials/deploy-kubernetes-dashboard

https://github.com/hub-kubernetes/kubeadm-multi-master-setup